Years ago, I listened to two old friends discuss a thought experiment. One was an astrophysicist, the other a data scientist; neither was sober. The thought experiment: if you fed all the relevant data into a computer, could the machine rediscover the laws of planetary motion by matching orbital periods, distances from the sun, velocities, et cetera, without human guidance?

I remembered that conversation when the self-taught artificial intelligence program AlphaZero thrashed one of the world’s strongest chess engines, Stockfish 8. There were three amazing things about that match.

AlphaZero won 28 games, drew 72 and lost none in a 100-game match against a program that would beat a human world champion by an even wider margin. Moreover, AlphaZero played in a style never seen before. The third amazing thing is, of course, that AlphaZero is entirely self-taught.

DeepMind’s AlphaZero runs on specially designed chips. It is relevant to note that DeepMind was co-founded by the chess and poker prodigy Demis Hassabis, who was beating grandmasters as a teenager before he meandered off into neuroscience. Hassabis did his PhD on the neural processes underlying memory.

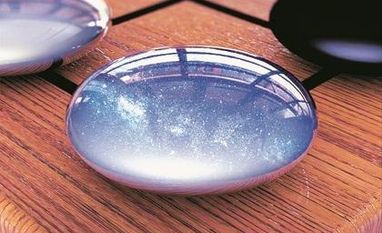

AlphaGo Zero, developed by DeepMind

Hassabis retains an abiding interest in games. Many computer scientists, starting with giants like Alan Turing and Claude Shannon, have experimented with chess, and later with Go. These games have simple rules yet generate enormous complexity. It is also easy to judge whether a program is successful: it beats strong players.

By the mid-1990s, high-end chess programs matched world champions in skill. In 1997, world champion Garry Kasparov lost a match to an IBM program, Deep Blue, which ran on specially designed chips. By the mid-2000s, cheap or free programs running on home PCs could easily beat world champions (Stockfish, for instance, is free and open source).

There are 20 possible opening moves in a chess game. After one move by each side, there are 400 possible positions; after three moves by each side, there are about 119 million possible move sequences. Claude Shannon estimated that there are at least 10^120 possible chess games, a number that far exceeds the number of atoms in the observable universe. It is impossible even for a very fast computer to “solve” chess.
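The arithmetic behind these figures is short. Each of White’s 20 first moves can be answered by any of Black’s 20. Shannon’s estimate, as it is usually summarised, assumed roughly 10^3 possibilities for each pair of moves (one by White, one by Black) and a typical game of about 40 such pairs; about 10^80 is a commonly cited estimate for the number of atoms in the observable universe. The last line assumes a hypothetical, generous machine that examines one complete game per nanosecond.

$$
\begin{aligned}
20 \times 20 &= 400 \\
\left(10^{3}\right)^{40} &= 10^{120} \gg 10^{80} \\
10^{120}\ \text{games} \times 10^{-9}\ \text{s/game} &= 10^{111}\ \text{s} \approx 3 \times 10^{103}\ \text{years}
\end{aligned}
$$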

Computers use a combination of human knowledge, ingenious programming and brute force to play. Strong human players use heuristics (rules of thumb) to find good moves and to assess positions by counting material, relative space control, king safety, pawn structure, mobility, et cetera. These rules are coded into programs, which are also fed millions of recorded games and given an opening “book” of moves derived from practice.

The program combines brute force with a search technique called “minimax”, usually sped up by “alpha-beta” pruning, which skips lines that cannot affect the final choice. Say there are 30 legal moves in a given position and 30 legal responses to each. The program works through these 900 possibilities and applies its programmed heuristics to rank them. It picks the move that gives it maximum advantage (or minimum disadvantage), assuming that the opponent will reply with the move that gives the opponent maximum advantage (or minimum disadvantage). It goes deeper and deeper as time and processing power permit. Running on an average quad-core PC, Stockfish crunches about 4 million positions per second.
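The search described above can be sketched on a tiny tree. This is a minimal illustration, not how Stockfish is written: the leaf numbers are made-up stand-ins for the heuristic scores an engine would compute, and the tree has 3 moves with 3 replies each instead of 30 × 30. `minimax` examines every leaf; `alphabeta` returns the same value while skipping replies that cannot change it. The function names and the tree are hypothetical; real engines add many refinements (move ordering, iterative deepening and more) on top of this core.

```python
from typing import Union

# Hypothetical toy game tree: a leaf is a heuristic score from the first
# player's point of view; an inner node is a list of subtrees, one per move.
Tree = Union[int, list]


def minimax(node: Tree, maximizing: bool, counter: list) -> int:
    """Plain minimax: scores every leaf. counter[0] counts leaf evaluations."""
    if isinstance(node, int):
        counter[0] += 1  # one heuristic evaluation
        return node
    scores = [minimax(child, not maximizing, counter) for child in node]
    # Our move: take the best score; opponent's move: assume they take the worst for us.
    return max(scores) if maximizing else min(scores)


def alphabeta(node: Tree, maximizing: bool, alpha: float, beta: float,
              counter: list) -> float:
    """Minimax with alpha-beta pruning: same result, fewer leaves scored.

    alpha: best score the maximizing side is already guaranteed elsewhere.
    beta: best score the minimizing side is already guaranteed elsewhere.
    """
    if isinstance(node, int):
        counter[0] += 1
        return node
    if maximizing:
        best = float("-inf")
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, counter))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the opponent will never allow this line; prune the rest
        return best
    best = float("inf")
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, counter))
        beta = min(beta, best)
        if alpha >= beta:
            break  # we already have a better option elsewhere; prune the rest
    return best


# Usage: three candidate moves, three replies to each.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
plain, pruned = [0], [0]
print(minimax(tree, True, plain), plain[0])  # 3 9
print(alphabeta(tree, True, float("-inf"), float("inf"), pruned), pruned[0])  # 3 7
```

Here pruning saves two of nine evaluations. In a deep search with good move ordering the savings compound, which is part of how an engine reaches depths that raw brute force could not.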

AlphaZero taught itself chess by playing against itself, without any human heuristics, recorded games or opening book. This “reinforcement learning” process seems to have rediscovered all the heuristics humans learnt over centuries, and some that humans don’t know. It analyses “only” 80,000 positions per second.
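AlphaZero itself pairs a deep neural network with Monte Carlo tree search, trained by self-play; none of that fits in a few lines. As a much smaller stand-in, here is a sketch that learns a toy take-away game purely by playing against itself. The game is a hypothetical example (players alternately remove 1, 2 or 3 stones; whoever takes the last stone wins), and the learner swaps in a plain lookup table with Q-learning-style updates instead of a neural network. It is given only the rules and the win/loss signal, no strategy. The names and parameters (`train_by_self_play`, `episodes`, `lr`, `epsilon`) are illustrative assumptions.

```python
import random

MAX_PILE = 10       # hypothetical largest starting pile
MOVES = (1, 2, 3)   # a player may remove 1, 2 or 3 stones


def legal_moves(pile: int) -> list:
    return [m for m in MOVES if m <= pile]


def train_by_self_play(episodes: int = 20000, lr: float = 0.1,
                       epsilon: float = 0.2, seed: int = 0) -> dict:
    """Learn move values purely from games the table plays against itself.

    q[(pile, move)] estimates the result (+1 win, -1 loss) for the player who
    makes `move` when `pile` stones remain, assuming good play afterwards.
    """
    rng = random.Random(seed)
    q = {(p, m): 0.0 for p in range(1, MAX_PILE + 1) for m in legal_moves(p)}
    for _ in range(episodes):
        pile = rng.randint(1, MAX_PILE)
        while pile > 0:
            moves = legal_moves(pile)
            # Epsilon-greedy: usually play the best-known move, sometimes explore.
            if rng.random() < epsilon:
                move = rng.choice(moves)
            else:
                move = max(moves, key=lambda m: q[(pile, m)])
            nxt = pile - move
            if nxt == 0:
                target = 1.0  # took the last stone: a win
            else:
                # Zero-sum game: our value is minus the opponent's best value.
                target = -max(q[(nxt, m)] for m in legal_moves(nxt))
            q[(pile, move)] += lr * (target - q[(pile, move)])
            pile = nxt  # the same table now plays the other side
    return q


def best_move(q: dict, pile: int) -> int:
    """Greedy move according to the learned table."""
    return max(legal_moves(pile), key=lambda m: q[(pile, m)])


q = train_by_self_play()
print([best_move(q, p) for p in (5, 6, 7)])
```

With these settings the greedy moves for piles 5, 6 and 7 should come out as 1, 2 and 3: without being told, the table has found the known winning strategy of always leaving the opponent a multiple of four stones.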

Earlier, AlphaGo Zero did the same with Go, which is far more complex than chess. There are 361 possible opening moves, and the number of legal board positions exceeds 10^170. AlphaGo Zero taught itself the game and beat its “earlier sibling”, AlphaGo, which had relied on human heuristics, recorded games, et cetera. In 2016, AlphaGo had become the first program to beat a Go world champion, Lee Sedol.

Now, if an AI can teach itself, it is conceivable that it could make big breakthroughs in understanding processes like protein folding and DNA combinations. These natural processes follow simple rules yet generate mathematical complexity that human beings cannot handle. An AI might even emulate Johannes Kepler and rediscover the laws of planetary motion from scratch. It might also take the masses of astronomical data we possess and find relationships that modern astrophysicists have not found.
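A much humbler version of the opening thought experiment can be run in a few lines. Given approximate orbital periods and mean distances from the sun for the six planets Kepler knew, a least-squares straight-line fit on the logarithms recovers the exponent in Kepler’s third law, T² ∝ a³. This is ordinary curve fitting, not self-taught AI: the form of the law (a power law) is assumed in advance, which is exactly the kind of human guidance the thought experiment asks a machine to do without. The data are rounded textbook values, and the function name `fit_power_law` is hypothetical.

```python
import math

# Approximate, rounded orbital data: semi-major axis a (astronomical units)
# and orbital period T (years) for the six planets known to Kepler.
PLANETS = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
    "Saturn": (9.537, 29.457),
}


def fit_power_law(pairs):
    """Least-squares fit of log T = k * log a + c; returns (k, c).

    Assumes T = e^c * a^k, i.e. that a power law relates the two quantities.
    """
    pairs = list(pairs)
    xs = [math.log(a) for a, _ in pairs]
    ys = [math.log(t) for _, t in pairs]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    k = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
         / sum((x - mx) ** 2 for x in xs))
    return k, my - k * mx


k, c = fit_power_law(PLANETS.values())
print(f"T is proportional to a^{k:.3f}")
```

The fitted exponent comes out very close to 1.5, which is Kepler’s third law: the square of the period grows as the cube of the distance.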

One problem with much of machine learning is that it requires extensive human input. Humans label images to build databases for self-driving cars; they identify cancer types for AIs reading MRI scans; they identify facial structures, buildings, geographical landmarks, et cetera, for drones. This is both expensive and time-consuming. If AIs can do this on their own, the process may become more efficient.

AlphaZero opens up exciting new vistas. It remains to be seen where Hassabis & Co will go next, and where others can apply this approach.
