We are used to partisan actors manipulating our information set through modern digital media. State actors, particularly undemocratic ones, also conduct such activities. Russia's methods around Brexit and the 2016 US elections are by now well understood, but many other similar projects have not received that level of analysis and limelight. We, in India, need to recognise that external state actors could be running such “influence operations” in India. Simplistic bans will not solve the problem.

We live in the information age, and an integral part of the information age is information warfare, the attempts by bad actors to manipulate the information set of the public. Internet-based campaigns, to intimidate or to malign, are manufactured much like crowds in many a political rally. Such campaigns are operated by political parties, private persons, and governments.

Influencing the mind of the public began with modest objectives like selling more toothpaste, but has morphed into bigger projects. The most famous influence campaigns were run by the Putin regime in 2016: in the UK, to promote Brexit, and in the US, to help elect Donald Trump. Disrupting the European Union was a long-cherished goal of Soviet foreign policy, and installing Mr Trump as the US President helped weaken America. These low-cost projects delivered good payoffs for the Putin regime.

Internet-based information manipulation is not illegal. It constitutes a new ability to translate money into a coordinated campaign that can distort the beliefs of many people. Vulnerability to manipulation is rooted in the extent to which the misinformation appears to come from a trusted source (such as the WhatsApp uncle).

We know these things better with the benefit of hindsight, but in 2016 they were not understood. This innocence, in the normal political process leading up to a vote, was part of the reason why these influence campaigns succeeded. By now, much detail about how these campaigns work has been uncovered through investigations in the US and the UK. The Putin regime used front organisations such as the Internet Research Agency, which employed hundreds of people who ran coordinated social media campaigns. A prime funder of this organisation was Yevgeny Prigozhin (fbi.gov/wanted/counterintelligence/yevgeniy-viktorovich-prigozhin), who is known in recent times as the owner of the “Wagner Group,” which is fighting in Ukraine.

These campaigns come in two kinds. Sometimes there is a specific policy objective, such as Brexit or a Trump victory, broadly aimed at disrupting the essence of liberal democracies. At other times, the aim is simply to sow chaos and confusion.

Russian trolls have simultaneously run pro-vaccine and anti-vaccine campaigns, aiming to get victims of this misinformation to hate one another, so as to drive up the level of conflict within the target society. On occasion, Russian trolls have even managed to organise real-world right-wing political rallies in the US.

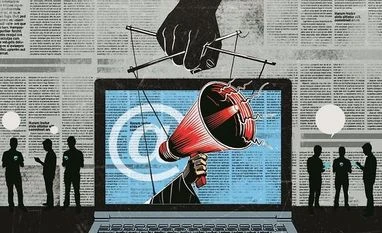

Illustration: Ajay Mohanty

In the field of cybersecurity, there is the phrase “advanced persistent threat” (APT), which is a euphemism for a state actor conducting cyberattacks. Only governments have the resources to be advanced (i.e. to bring sophistication to bear on the problem) and persistent (to chip away at a problem over long periods of time). A similar concept can usefully be applied to influence campaigns. Thousands of private individuals are fixated on their enemies and use the internet to defame them and to influence government officials about them, but state-run campaigns can rise to a greater lethality. In the future, better text generators like ChatGPT will lower the cost of faking the markers of culture and knowledge that written text betrays.

While all governments could potentially do such things, it is less likely in mature democracies. As an example, when Edward Snowden revealed the illegal surveillance program run by the National Security Agency in the US, the program was shut down.

For another example, a lot is known about the US anti-tank missile, the Javelin, because the US budget process is transparent and gives details including the precise number of missiles produced. While secret influence programs can exist in mature democracies, they are likely to be smaller and less frequent. But conditions are very different in countries that are not fully free, like China and Russia. The opacity of these governments makes it possible to hide substantial expenses and organisations, and these undemocratic governments would not be rocked by disclosures of unconventional warfare undertaken through cyberattacks or influence campaigns.

We, in India, need to recognise the problem of influence campaigns aimed at reshaping the behaviour of our state and political system. At present, the campaigns we see are run by local businessmen and local political parties. We should also consider it possible that foreign states run such campaigns. We can envision many kinds of influence operations that seek outcomes from the Indian political process, or behaviour by the Indian state, which weaken India. As an example, India is one of the countries in the “global South” that have been the target of Russian influence campaigns on the subject of the invasion of Ukraine.

What is to be done? It is too easy for these problems to feed into Indian xenophobia and the proclivity for arrests and bans. This path will actually help adversaries achieve the objective of weakening India. Internet-based influence campaigns are interdisciplinary problems, which require bringing together knowledge of technology policy, foreign policy, media, and the political system. Solving them requires commensurate expertise.

The first step lies in awareness. There was a time when Twitter or the WhatsApp uncle was taken more seriously. All of us are now faring better at recognising the misinformation campaigns in play, and at being sceptical about the vast amount of material on the internet. We now need to extend this scepticism to overseas authoritarian governments and their priority of weakening India.

The writer is a researcher at XKDR Forum
