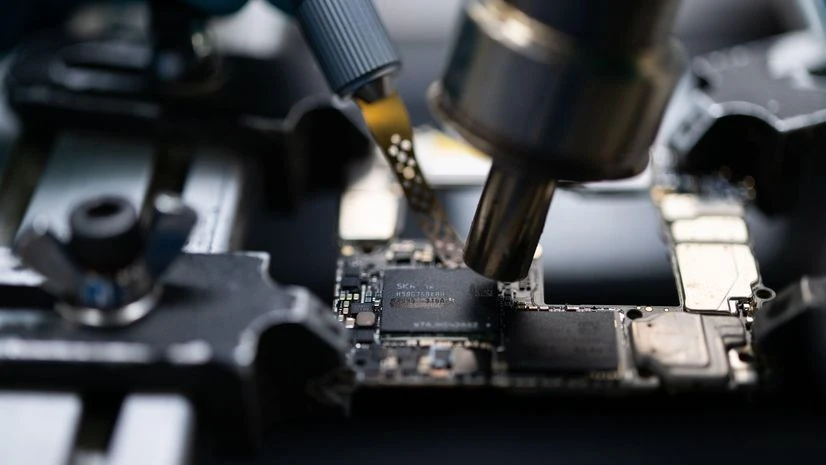

South Korea's SK Hynix said on Thursday that its high-bandwidth memory (HBM) chips used in AI chipsets were sold out for this year and almost sold out for 2025 as businesses aggressively expand artificial intelligence services.

The Nvidia supplier and the world's second-largest memory chipmaker will begin sending samples of its latest HBM chip, called the 12-layer HBM3E, in May and begin mass producing them in the third quarter.

"The HBM market is expected to continue to grow as data and (AI) model sizes increase," Chief Executive Officer Kwak Noh-Jung told a news conference. "Annual demand growth is expected to be about 60 per cent in the mid- to long-term."

SK Hynix, which competes with U.S. rival Micron and domestic behemoth Samsung Electronics in HBM, was until March the sole supplier of HBM chips to Nvidia, according to analysts, who add that major AI chip purchasers are keen to diversify their suppliers to better maintain operating margins.

Nvidia commands some 80 per cent of the AI chip market.

Micron has also said its HBM chips were sold out for 2024 and that the majority of its 2025 supply was already allocated.

It plans to provide samples for its 12-layer HBM3E chips to customers in March.

"As AI functions and performance are being upgraded faster than expected, customer demand for ultra-high-performance chips such as the 12-layer chips appear to be increasing faster than for 8-layer HBM3Es," said Jeff Kim, head of research at KB Securities.

Samsung Electronics, which plans to produce its HBM3E 12-layer chips in the second quarter, said this week that this year's shipments of HBM chips are expected to increase more than three-fold and it has completed supply discussions with customers. It did not elaborate further.

Last month, SK Hynix announced a $3.87 billion plan to build an advanced chip packaging plant in the U.S. state of Indiana with an HBM chip line and a 5.3 trillion won ($3.9 billion) investment in a new DRAM chip factory at home with a focus on HBMs.

Kwak said investment in HBM differed from past patterns in the memory chip industry in that capacity is being increased after making certain of demand first.

By 2028, chips made for AI, such as HBM and high-capacity DRAM modules, are expected to account for 61 per cent of all memory volume in terms of value, up from about 5 per cent in 2023, SK Hynix's head of AI infrastructure Justin Kim said.

Last week, SK Hynix said in a post-earnings conference call that there may be a shortage of regular memory chips for smartphones, personal computers and network servers by the year's end if demand for tech devices exceeds expectations.

(Only the headline and picture of this report may have been reworked by the Business Standard staff; the rest of the content is auto-generated from a syndicated feed.)
